Most SEO audit scores deliver confidence without understanding.

A neat dashboard, a 92/100 score, a colorful list of “critical issues”—all of it feels objective.

It feels like progress.

It feels like clarity.

But the feeling is misleading.

Google recently emphasized that SEO audit scores do not correlate with ranking systems or search performance.

This wasn’t a soft nudge—it was a direct warning:

“Don’t rely on SEO tool scores to understand or improve your rankings.”

This should have been a wake-up call.

Instead, most teams continued business as usual:

→ Fix whatever is red until it turns green

→ Chase a higher score

→ Assume the algorithm rewards cleanliness

It doesn’t.

The web is full of 95/100 pages that don’t rank, and 60/100 pages that dominate entire SERPs.

That gap exists because audit scores measure surface errors, while Google evaluates contextual usefulness.

And that is the hidden cost of relying on scores:

You focus on what’s easy to fix, not what changes your position in the market.

You polish the edges of your website while competitors rebuild the foundation.

You spend 30 hours deleting unused CSS when your real issue is intent mismatch.

You fix 22 “warnings” that have zero effect on impressions, UX, or semantic clarity.

You optimize for Lighthouse, but not for the search experience.

This is the core truth:

SEO performance isn’t determined by a checklist. It’s determined by context—your page, your niche, your competitors, your search intent, and your user behavior.

When you switch from score-first to context-first, priorities flip upside down.

Pages you ignored become high-leverage.

Issues you stressed about become irrelevant.

Three fixes outperform thirty.

This article is about making that strategic shift—away from numeric vanity, toward contextual decision-making that mirrors how search engines actually evaluate content.

This shift is the difference between doing SEO and understanding SEO.

It changes everything.

Concept Reframe

Most SEO teams still operate on an outdated model of optimization:

Run an audit → collect issues → chase a higher score → expect rankings to rise.

This model feels logical because it mirrors how we understand grades, diagnostics, and performance metrics.

But SEO isn’t a classroom, and Google doesn’t reward clean report cards.

The old model is built on three flawed assumptions:

Assumption 1: A single score can summarize website health

Audit scores compress hundreds of variables into one number.

This flattening erases nuance.

A broken canonical and missing image alt text can appear as equally “critical,” even though one can disrupt indexation and the other usually has little or no effect on rankings.

Assumption 2: Every site should fix the same issues

Audit tools apply the same rules to every domain.

Google doesn’t.

Search context varies by niche, intent, SERP structure, and competitive sophistication.

Your biggest issue may be something no audit tool can detect:

- misaligned content depth

- lack of topical authority

- fragmented internal links

- competing intents within a cluster

None of this fits neatly into a 0–100 score.

Assumption 3: Google evaluates websites the same way audits do

Audit tools score based on HTML patterns, speed metrics, accessibility notes, and technical cleanliness.

Google ranks based on how well a page satisfies the user’s task.

This includes:

- behavior patterns

- semantic coverage

- content quality

- query alignment

- experience flow

- competitive strength

- site structure context

- topical depth and interlinking

Audit scores don’t measure these.

Context does.

Why this matters now

Search is increasingly situational.

The same issue has different severity depending on:

- the page’s role

- the query’s intent

- the competitive landscape

- the user journey

- the topic cluster it belongs to

SEO is no longer checklist-driven.

It’s environment-driven.

To succeed, you need a system that understands contextual relevance, not cosmetic cleanliness.

And that’s where the old model collapses.

We need a replacement—something structured, practical, and aligned with modern search behavior.

So we introduce a new operational framework.

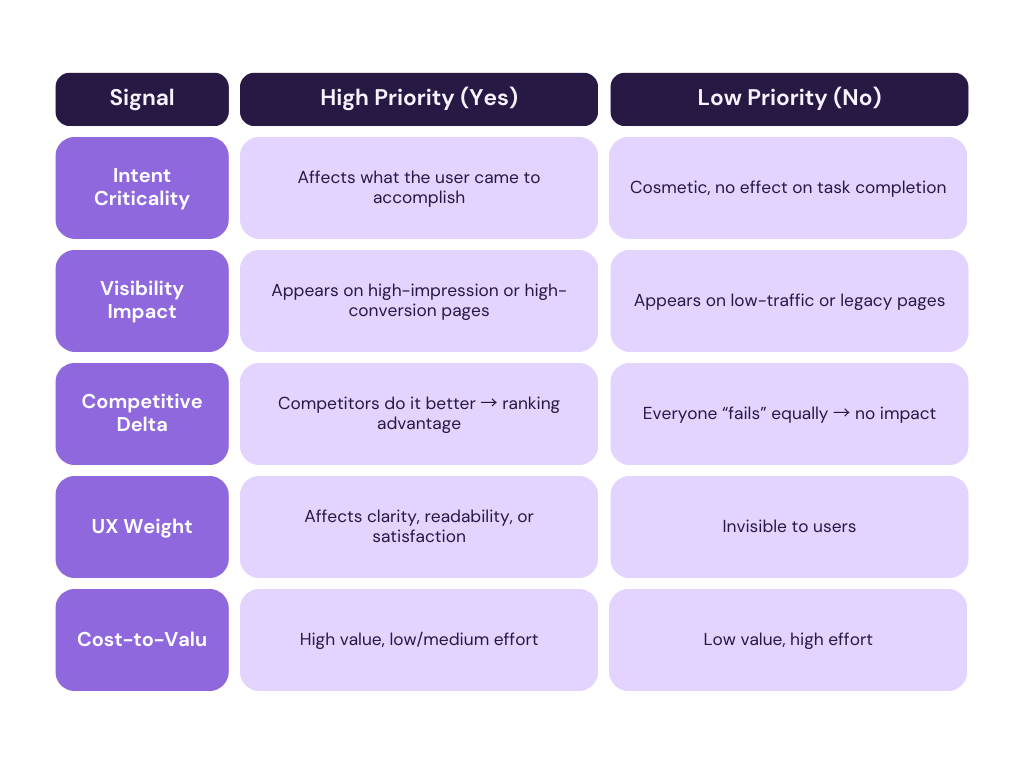

The Contextual SEO Decision System (CSDS)

Signal 1 — Intent Criticality

Does this issue impact how well the page satisfies the searcher’s intent?

Intent is the ultimate filter.

If an issue affects how quickly or clearly a user gets what they came for, it’s high priority.

If it doesn’t, it drops to the bottom.

Examples:

- Misaligned header structure = high intent impact

- Outdated product content = high intent impact

- Missing alt text on a decorative icon = zero intent impact

This single signal eliminates ~50% of audit noise.

Signal 2 — Visibility Impact

Does the issue occur on pages that matter?

A “warning” on a page with 0 impressions is not the same as a warning on your highest-impression URL.

Visibility amplifies or suppresses severity.

Pages that need scrutiny:

- high-traffic clusters

- high-impression URLs

- pages ranking 8–20 (move-ready)

- commercial or conversion-critical pages

Pages that rarely need urgent fixes:

- paginated archives

- expired content

- deprecated pages

- low-impression legacy posts

Context assigns value.

Signal 3 — Competitive Delta

Does fixing this issue meaningfully differentiate you from competitors?

This is one of the most overlooked dimensions in SEO.

An issue matters only if it moves you above the competitive cluster.

If every competitor lacks schema, your missing schema is not a differentiator.

If every competitor loads in 2.4 seconds, improving to 1.8 seconds is unlikely to win rankings on its own.

If competitors use intent-rich headers and you don’t, the delta becomes critical.

Audit tools don’t measure competitive context.

CSDS does.

Signal 4 — User Experience Weight

Does the issue change how humans read, navigate, or trust the page?

Google’s evaluation is behavior-informed.

UX issues that affect human interaction usually ripple into SEO performance via:

- time to answer

- scroll depth

- pogo-sticking

- first impressions

- readability

- information architecture clarity

Examples of high-impact UX issues:

- confusing first screen

- dense paragraphs

- weak content hierarchy

- no anchor links on long pages

Examples of low-impact UX issues:

- color contrast warnings on non-critical elements

- minor layout shifts below the fold

User experience weight separates cosmetic bugs from experiential friction.

Signal 5 — Cost-to-Value Ratio

Is the fix worth the effort?

This final signal turns strategy into operations.

Every SEO team has finite bandwidth.

You cannot — and should not — fix everything.

The cost-to-value signal forces a trade-off conversation:

- Does this require engineering time?

- Does it require rewriting large sections of content?

- Does it require restructuring templates?

- Does it require updating dozens of pages?

If the value is low relative to cost, the issue stays unfixed.

Audit tools ignore cost.

CSDS makes it central.

How CSDS Replaces Score-Chasing

A score-focused mindset asks:

“What’s wrong with the website?”

CSDS asks:

“What matters enough to change our ranking trajectory?”

That simple shift—from problems to priorities—is the difference between busywork and progress.

How to Apply CSDS in Real SEO Workflows

The Contextual SEO Decision System (CSDS) becomes powerful only when it’s operationalized.

These steps show how to use CSDS to turn any audit into a contextual roadmap.

Each step expands your clarity.

Each step reduces noise.

Each step brings you closer to the issues that actually move rankings.

Step 1 — Map Each Issue to Search Intent

Intent is the anchor.

If an issue doesn’t influence how the page satisfies the searcher’s goal, it should not receive high priority—no matter how “critical” the audit labels it.

How to map intent quickly:

For each page, ask:

- What is the primary task the user must complete?

- What information hierarchy supports that task?

- Does the flagged issue interfere with this?

- Would fixing it improve clarity, alignment, or satisfaction?

Example:

- A product page missing structured product data = high intent impact

- A blog post with an oversized image = low intent impact

- A how-to guide with shallow steps = extremely high intent impact

- Missing meta description on an archive page = irrelevant

This single step cuts 40–60% of audit noise immediately.

Intent separates real problems from fake ones.

Step 2 — Quantify Impact Using Real Metrics

Audit tools show you errors.

They don’t show you consequences.

You need to look at your search data before fixing anything.

Metrics that matter most:

- Impressions: Is Google even showing this page?

- Clicks: Does the page drive meaningful traffic?

- CTR: Does the content match expectations?

- Average Position: Is the page close to breakout improvements (positions 8–20)?

- Conversion Pathways: Does this page assist revenue or conversions?

- Internal Link Influence: Does this page pass authority to key URLs?

Impact Rule:

A medium-severity issue on a high-impact page > a high-severity issue on a low-impact page.

Audit tools cannot calculate value.

Metrics do.

Impact narrows your focus from “everything wrong” to “what’s wrong where it matters.”
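To make Step 2 concrete, here is a minimal Python sketch, assuming you have already exported per-page clicks, impressions, and average position (for example, from a Search Console performance report) into simple records. The `PageMetrics` fields, sample URLs, and numbers are hypothetical; the 8–20 "move-ready" band comes from the article, and CTR is computed as clicks divided by impressions.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    # Hypothetical record for one URL, e.g. built from a performance export
    url: str
    clicks: int
    impressions: int
    position: float  # average position; lower is better


def ctr(page: PageMetrics) -> float:
    """Click-through rate = clicks / impressions (0.0 when there are no impressions)."""
    return page.clicks / page.impressions if page.impressions else 0.0


def is_move_ready(page: PageMetrics, best: float = 8, worst: float = 20) -> bool:
    """True if Google already shows the page and it averages positions 8-20."""
    return page.impressions > 0 and best <= page.position <= worst


pages = [
    PageMetrics("/pricing", clicks=120, impressions=4000, position=11.2),
    PageMetrics("/guide/setup", clicks=900, impressions=9000, position=3.4),
    PageMetrics("/blog/old-announcement", clicks=0, impressions=0, position=0.0),
]

for page in pages:
    print(f"{page.url}: CTR {ctr(page):.1%}, move-ready: {is_move_ready(page)}")
```

Only `/pricing` is flagged as move-ready: `/guide/setup` already ranks near the top, and the zero-impression page is not visible at all.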

Step 3 — Validate Severity in Context, Not Isolation

Audit tools isolate issues.

CSDS evaluates them in the broader system.

We use a simple multiplicative heuristic:

Severity = Impact × Visibility × Frequency

- Impact: How much does this issue affect user or search understanding?

- Visibility: Does it occur on traffic-driving pages or hidden pages?

- Frequency: Does it appear once or across an entire cluster/template?

This model gives you a more accurate sense of urgency than a tool’s generic severity label.

Example:

- Thin content on a single high-conversion page → High severity

- Poor heading structure across 60 pages that get no impressions → Low severity

- Slow LCP on the top 3 commercial pages → Critical severity
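The severity heuristic can be sketched in a few lines of Python. The 0–5 scales and the scores assigned to the three examples are illustrative assumptions, not calibrated values. The point is the multiplication: a zero on any factor (such as pages with no impressions) drives severity to zero, however large the other factors are.

```python
from dataclasses import dataclass


@dataclass
class AuditIssue:
    name: str
    impact: int      # 0-5: effect on user or search understanding (illustrative scale)
    visibility: int  # 0-5: 0 = no impressions, 5 = top traffic-driving pages
    frequency: int   # 0-5: 1 = a single page, 5 = a whole cluster or template

    @property
    def severity(self) -> int:
        # Severity = Impact x Visibility x Frequency; a zero anywhere zeroes the result
        return self.impact * self.visibility * self.frequency


issues = [
    AuditIssue("Thin content on one high-conversion page", impact=4, visibility=5, frequency=1),
    AuditIssue("Poor heading structure on 60 zero-impression pages", impact=2, visibility=0, frequency=5),
    AuditIssue("Slow LCP on the top 3 commercial pages", impact=4, visibility=5, frequency=2),
]

for issue in sorted(issues, key=lambda i: i.severity, reverse=True):
    print(f"{issue.severity:>3}  {issue.name}")
```

Sorted by severity, the slow-LCP issue comes first, the thin-content page second, and the heading issue last with a score of 0, which mirrors the critical, high, and low labels in the examples.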

Context changes everything.

Severity becomes a product of context, not a fixed label.

Step 4 — Benchmark Against Competitors

An SEO issue only matters if it affects your ability to outperform competitors.

Competitive Benchmarking Questions:

- Are top-ranking competitors solving this issue better?

- Does the issue contribute to their advantage?

- Are they using schema, structure, or depth that you lack?

- Are they loading faster at meaningful friction points?

- Do they organize content or clusters more clearly?

If the entire SERP “fails” an audit check, the issue is non-differentiating.

If the SERP excels at something you ignore, the issue becomes high leverage.

This is where most audit-based SEO breaks.

You fix what your competitors also ignore, instead of what your competitors use to win.
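The competitive benchmark can be expressed as a small rule. This sketch assumes you have manually recorded, for each top-ranking competitor, whether it satisfies the check the audit flagged (for example, schema markup). The article defines only the two extremes; the `majority` threshold and the "no gap" and "situational" labels are assumptions added for illustration.

```python
def competitive_delta(we_do_it: bool, competitors_do_it: list[bool], majority: float = 0.5) -> str:
    """Classify an audit check by how the top-ranking competitors handle it.

    we_do_it: whether our page already satisfies the check.
    competitors_do_it: one boolean per top-ranking competitor (recorded manually).
    majority: hypothetical share above which the SERP "excels" at the check.
    """
    if not competitors_do_it:
        raise ValueError("need at least one competitor to compare against")
    if we_do_it:
        return "no gap"
    share = sum(competitors_do_it) / len(competitors_do_it)
    if share == 0:
        # The whole SERP "fails" the check, so fixing it does not set us apart
        return "non-differentiating"
    if share > majority:
        # Most competitors do something we ignore
        return "high leverage"
    return "situational"  # assumption: mixed SERPs need a judgment call


print(competitive_delta(False, [False, False, False, False, False]))  # e.g. nobody uses schema
print(competitive_delta(False, [True, True, True, True, False]))      # e.g. intent-rich headings
```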

Step 5 — Prioritize Using Cost-to-Value Ratio

Every company has limited time, budget, and engineering capacity.

SEO without prioritization becomes noise-driven busywork.

To apply cost-to-value:

Classify each issue into one of four quadrants:

| Value | Cost | Priority |
|-------|------|----------|
| High | Low | Top priority → act immediately |
| High | High | Evaluate → consider ROI, phased approach |
| Low | Low | Batch and fix later |
| Low | High | Ignore or de-prioritize indefinitely |

Examples:

- Updating obsolete content on a high-intent page → High value / low cost

- Rewriting the entire template for minor accessibility warnings → Low value / high cost

- Reorganizing internal links to strengthen a cluster → High value / high cost

- Removing unused CSS sitewide → Low value / medium-high cost
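The quadrant table maps directly to a lookup. This Python sketch uses a hypothetical two-level `Level` enum. Because the model has only two cost levels, the "medium-high" cost of the unused-CSS example is rounded up to high here, which is an assumption.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


# The four value/cost quadrants: (value, cost) -> priority
QUADRANTS = {
    (Level.HIGH, Level.LOW): "Top priority → act immediately",
    (Level.HIGH, Level.HIGH): "Evaluate → consider ROI, phased approach",
    (Level.LOW, Level.LOW): "Batch and fix later",
    (Level.LOW, Level.HIGH): "Ignore or de-prioritize indefinitely",
}


def prioritize(value: Level, cost: Level) -> str:
    """Return the quadrant's priority for an issue's value/cost estimate."""
    return QUADRANTS[(value, cost)]


examples = {
    "Update obsolete content on a high-intent page": (Level.HIGH, Level.LOW),
    "Rewrite the template for minor accessibility warnings": (Level.LOW, Level.HIGH),
    "Reorganize internal links to strengthen a cluster": (Level.HIGH, Level.HIGH),
    # "Medium-high" cost is rounded up to HIGH in this two-level model (assumption)
    "Remove unused CSS sitewide": (Level.LOW, Level.HIGH),
}

for issue, (value, cost) in examples.items():
    print(f"{issue}: {prioritize(value, cost)}")
```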

Step 6 — Convert Insights into a Real Roadmap

Once you’ve applied intent, impact, severity, competitive delta, and cost-to-value, you can build a real SEO roadmap.

Roadmap Structure:

- Cluster issues by theme:

  - Content

  - Technical

  - UX

  - Architecture

  - Internal links

- Group issues by page types and templates.

- Assign high-impact items to the next sprint.

- Schedule medium-impact items into quarterly cycles.

- Ignore low-value tasks permanently.

The Output:

Instead of a list of 100 “problems,” you end up with 5–12 high-value actions that genuinely affect rankings.

This is when SEO moves from cleanup to growth.

Examples

Examples make the context-first model tangible.

Here are three scenarios that show how score-based SEO fails and context-based SEO wins.

Example 1 — A Score Rises, but Rankings Don’t

A B2B SaaS company improved its audit score from 52 to 88 after fixing dozens of tool-flagged issues:

- compressed images

- resolved accessibility warnings

- cleaned up unused CSS

- added missing alt text

- reduced DOM size

- removed duplicated H1 tags

- fixed minor 404s

The score improved by 36 points.

Traffic didn’t move.

Rankings stayed flat.

Impressions were unchanged.

Why?

Because the real issues weren’t flagged by the audit:

- thin content on core money pages

- outdated feature descriptions

- mismatched headings with commercial intent

- weak internal link distribution

- insufficient topical depth in their main cluster

This is the cost of chasing green lights.

You polish the frontend while the backend of your strategy remains broken.

Example 2 — Fixing Just 5 Contextual Issues → +42% Clicks

A publisher focused on five context-first improvements:

- rewrote a thin high-impression article

- reorganized headers around user intent

- added missing subtopic depth (FAQs, comparisons, clarifications)

- tightened internal linking to anchor cluster pages

- updated stale examples and data

No technical cleanup.

No template rewrites.

No CSS reductions.

No “score improvements.”

Results:

+42% clicks in 60 days

+29% impressions

+18% average position lift across a cluster

This result came from contextual triage, not technical polishing.

Example 3 — Score 95/100 vs Score 60/100

Two pages compete in the same SERP:

- The 95/100 page is technically pristine—fast, clean, polished.

- The 60/100 page is technically imperfect.

The 60/100 page outranks the 95/100 page.

Why?

Because the 60/100 page:

- matches search intent better

- organizes content with clearer hierarchy

- provides deeper, more satisfying coverage

- uses meaningful subtopics

- responds to real user behavior patterns

The audit tool can’t “see” this.

Google can.

Users can.

This is the core message:

Search does not reward technical perfection.

Search rewards contextual alignment.

The Contextual SEO Priority Toolkit

Audit tools give you scores.

CSDS gives you decisions.

To help you operationalize that shift, here is the Contextual SEO Priority Toolkit — a compact system that turns any audit into a contextual action plan in under 30 minutes.

1. The Context Evaluation Grid

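Evaluate any issue flagged by an audit against the five CSDS signals: intent criticality, visibility impact, competitive delta, user experience weight, and cost-to-value ratio.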

Rule:

If an issue checks 3 or more of the 5 signals, it becomes a contextual priority.

If it checks 0–1 signals, it becomes noise.
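A minimal sketch of the grid rule, assuming each issue is tagged with the set of CSDS signals it checks. The signal identifiers are hypothetical names for the five signals. The rule does not say what happens at exactly 2 signals, so the function returns "borderline" for that case as an assumption.

```python
SIGNALS = frozenset({
    "intent_criticality",
    "visibility_impact",
    "competitive_delta",
    "ux_weight",
    "cost_to_value",
})


def grid_verdict(checked: set[str]) -> str:
    """Apply the grid rule to the set of CSDS signals an issue checks."""
    unknown = checked - SIGNALS
    if unknown:
        raise ValueError(f"unknown signals: {sorted(unknown)}")
    if len(checked) >= 3:
        return "contextual priority"
    if len(checked) <= 1:
        return "noise"
    return "borderline"  # exactly 2 signals: not covered by the rule (assumption)


print(grid_verdict({"intent_criticality", "visibility_impact", "ux_weight"}))
print(grid_verdict({"cost_to_value"}))
```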

2. Contextual SEO Action Pyramid

Prioritize fixes using this simple structure:

Level 1 — High-Leverage Fixes

(Do immediately)

- Intent mismatch

- Thin high-intent content

- Confusing header structure

- Missing subtopic coverage

- Internal link dilution

- Broken flows affecting UX

- Outdated high-visibility content

Level 2 — Situational Fixes

(Do after evaluation)

- Schema gaps

- Competitor-driven enhancements

- Cluster-level improvements

- Template-level UX corrections

- Moderate technical debt with observable impact

Level 3 — Cosmetic Fixes

(Only fix if bundled or low-cost)

- Decorative alt text

- CSS cleanup

- Non-blocking accessibility alerts

- Duplicated H1s in archive pages

- Minor performance optimizations below the fold

Level 4 — Ignore Indefinitely

(Do not invest time)

- Issues with zero contextual weight

- Issues with no user impact

- Issues with no competitive relevance

- Issues requiring high cost for negligible returns

This pyramid eliminates >60% of low-value tasks.

3. The 7-Question Priority Checklist

Ask these before fixing anything:

- Does this issue affect a page that already receives traffic or impressions?

- Does it improve how well the page satisfies search intent?

- Does it influence user behavior (scroll depth, clarity, trust, UX)?

- Does it move us closer to or above our competitors?

- Is this issue aligned with business-critical page types (money pages, hubs)?

- Can the fix scale across clusters or templates?

- Is the value worth the engineering/editorial cost?

If you answer “yes” to 4 or more, fix it.

If “yes” to 1 or fewer, deprioritize it.
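The checklist thresholds translate into a simple count of "yes" answers. Here the answers are a dictionary keyed by shortened versions of the seven questions (a hypothetical input format). The checklist gives no outcome for 2 or 3 "yes" answers, so this sketch labels those "review", which is an assumption.

```python
CHECKLIST = (
    "Affects a page that already receives traffic or impressions",
    "Improves how well the page satisfies search intent",
    "Influences user behavior (scroll depth, clarity, trust, UX)",
    "Moves us closer to or above our competitors",
    "Aligned with business-critical page types (money pages, hubs)",
    "Fix can scale across clusters or templates",
    "Value is worth the engineering/editorial cost",
)


def checklist_decision(answers: dict[str, bool]) -> str:
    """Count "yes" answers to the seven questions and apply the thresholds."""
    missing = [q for q in CHECKLIST if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    yes = sum(answers[q] for q in CHECKLIST)
    if yes >= 4:
        return "fix"
    if yes <= 1:
        return "deprioritize"
    return "review"  # 2-3 "yes" answers: left open by the checklist (assumption)


# Example: "yes" to the traffic, intent, business-critical, and cost questions
answers = {q: i in {0, 1, 4, 6} for i, q in enumerate(CHECKLIST)}
print(checklist_decision(answers))
```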

4. The 10-Minute Roadmap Builder

After evaluating an audit:

- Identify high-impact clusters

- Apply CSDS signals to each issue

- Move low-value issues to backlog or ignore list

- Group remaining issues into 3 sprints

- Assign technical vs editorial owners

- Track outcomes in Google Search Console (impressions, CTR, coverage, ranking shifts)

This turns long audits into lean action plans.
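The roadmap steps can be roughly automated once each issue carries a value score, an owner, and a flag for whether it sits in a high-impact cluster. In this sketch, `RoadmapIssue`, the 1–5 value scale, and the `min_value=3` cut-off are hypothetical. Issues outside high-impact clusters or below the cut-off go to the backlog; the rest are split, highest value first, into at most three sprints.

```python
import math
from dataclasses import dataclass


@dataclass
class RoadmapIssue:
    name: str
    owner: str                   # "technical" or "editorial"
    value: int                   # hypothetical 1-5 score after applying the CSDS signals
    in_high_impact_cluster: bool


def build_roadmap(issues: list[RoadmapIssue], min_value: int = 3, num_sprints: int = 3):
    """Return (sprints, backlog): sprints hold active issues, highest value first."""
    active, backlog = [], []
    for issue in issues:
        if issue.in_high_impact_cluster and issue.value >= min_value:
            active.append(issue)
        else:
            backlog.append(issue)  # low-value or outside the clusters that matter
    active.sort(key=lambda i: i.value, reverse=True)  # stable sort: ties keep input order
    size = max(1, math.ceil(len(active) / num_sprints))
    # Chunk into slices of `size`; this never yields more than num_sprints chunks
    sprints = [active[k:k + size] for k in range(0, len(active), size)]
    return sprints, backlog


issues = [
    RoadmapIssue("Rewrite thin copy on the pricing page", "editorial", 5, True),
    RoadmapIssue("Fix intent mismatch on the comparison page", "editorial", 5, True),
    RoadmapIssue("Consolidate internal links in the main cluster", "technical", 4, True),
    RoadmapIssue("Add missing FAQ subtopics", "editorial", 3, True),
    RoadmapIssue("Remove unused CSS sitewide", "technical", 1, True),
    RoadmapIssue("Add decorative alt text on archive pages", "editorial", 2, False),
]

sprints, backlog = build_roadmap(issues)
for number, sprint in enumerate(sprints, start=1):
    for issue in sprint:
        print(f"Sprint {number} [{issue.owner}] {issue.name}")
print("Backlog:", [issue.name for issue in backlog])
```

With four active issues, this chunking fills only two sprints. Spreading work across all three sprints regardless of volume would be an equally reasonable design choice.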

5. What Changes When You Use This Toolkit

You stop chasing green lights.

You stop relying on vanity metrics.

You stop wasting engineering cycles.

Instead, you:

- fix less → gain more

- prioritize better → rank faster

- align with user behavior → satisfy intent

- work like the algorithm → not like the audit tool

This is how you move from SEO cleanup to SEO performance.

If this framework helped you rethink how you approach SEO, you’ll find even more value in the systems I share each week:

- contextual SEO workflows

- clustering models

- AI-assisted editorial systems

- advanced internal linking structures

- deep competitive analysis blueprints

Follow for more systems, frameworks, and actionable SEO workflows that replace noise with clarity.

Mayank Ranjan

Mayank Ranjan is a digital marketing strategist and content creator with a strong passion for writing and simplifying complex ideas. With 7+ years of experience, he blends AI-powered tools with smart content marketing strategies to help brands grow faster and smarter.

Known for turning ideas into actionable frameworks, Mayank writes about AI in marketing, content systems, and personal branding on his blog, ranjanmayank.in, where he empowers professionals and creators to build meaningful digital presence through words that work.