If you’re still optimizing only for Google, you’re already behind.

Today’s search behavior isn’t limited to search bars; it’s also happening in chatbots, AI tools, and conversational assistants. People are asking questions directly to tools like ChatGPT, Perplexity AI, Claude, and Google’s AI Overviews (SGE), and expecting straight, source-backed answers.

But here’s the thing: these large language models don’t just crawl your content the way Googlebot does. They don’t index everything. They don’t rely on backlinks in the same way. And they don’t rank your page at position #1 just because you hit the right keyword.

So, how do you guide an AI model to present your best content in the way you want it to be seen?

You use llms.txt.

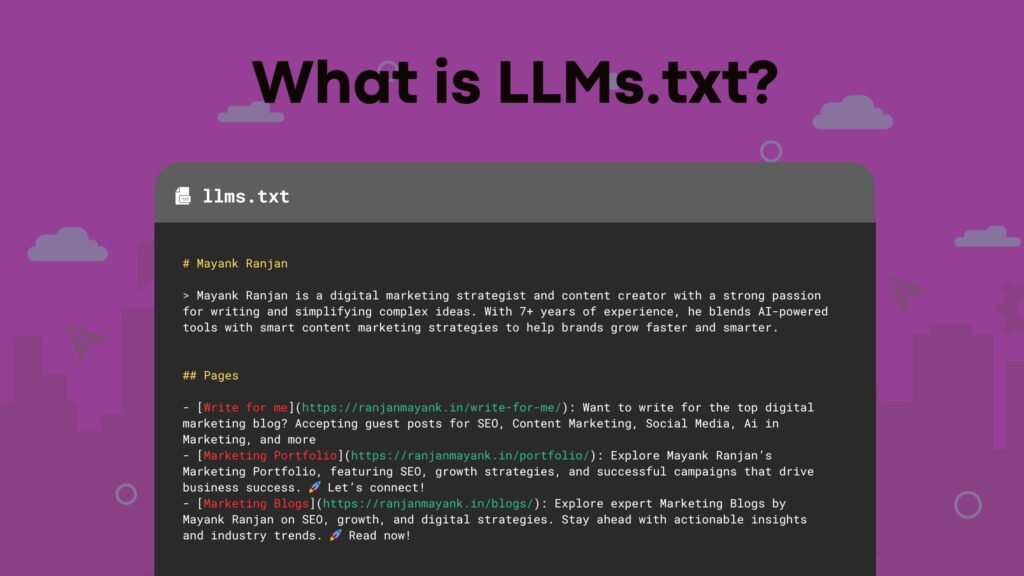

This new file is not just a technical trend; it’s a content strategy shift. llms.txt (named for the large language models, or LLMs, it is written for) is a simple Markdown file that sits in the root of your website. Its job? To help AI tools understand the structure of your content, identify your most authoritative pages, and prioritize the answers you want to be known for.

If robots.txt tells bots where not to go, and sitemap.xml tells search engines what to index, then llms.txt is your AI visibility blueprint. It doesn’t block or command — it communicates. It tells AI assistants:

“Here’s what you should read first if you want to understand what this site is about.”

And in an era where more users are relying on chat-based answers rather than clicking through links, that guidance is powerful.

Over the past few months, I’ve implemented llms.txt and its expanded version, llms-full.txt, on my blog ranjanmayank.in. I’ve structured it to highlight the pages I want AI tools to see, index, and cite. I’ve optimized it not just for SEO, but for Answer Engine Optimization. And now, I’m sharing the exact playbook with you.

In this complete guide, you’ll learn what llms.txt really is, why it’s a key part of your AI strategy, how to build it step-by-step, and how to link it with your robots.txt and existing SEO workflows.

Whether you’re a content marketer, an SEO pro, a developer, or a creator building your digital authority, this guide will help you prepare your site for the future of search. One that’s conversational, AI-powered, and citation-driven.

Let’s build your AI visibility from the ground up, starting with a single text file that makes a big difference.

What is llms.txt?

llms.txt is a plain-text file that lives at the root of your website (like yourdomain.com/llms.txt) and serves one simple but powerful purpose: to guide AI bots like ChatGPT, Claude, and Perplexity through your most valuable content.

Unlike robots.txt, which tells bots what not to access, or sitemap.xml, which lists every page for indexing, llms.txt acts as a curated guide for LLMs (large language models). It points to your best content and provides helpful descriptions so that AI tools can understand what your site is really about, without guessing.

Here’s what makes llms.txt unique: it’s written in Markdown, not XML. That means it’s human-readable, AI-readable, and structured for clarity. Instead of crawling hundreds of pages, an LLM can read one clean file and get a sense of what matters most on your site.

Why is that important? Because AI search tools don’t just crawl and index like Google. They summarize, cite, and respond to real user questions. For them to include your content in those answers, they need to understand your expertise, not just your keywords.

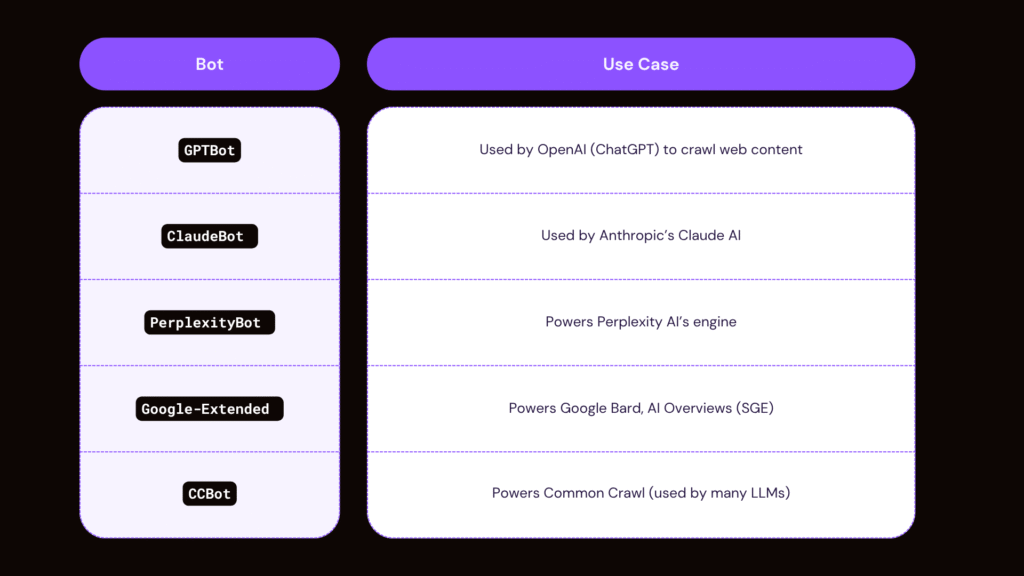

Bots like GPTBot (used by OpenAI and ChatGPT), ClaudeBot (used by Anthropic’s Claude), and PerplexityBot are actively crawling websites to fuel their responses. And they’re looking for high-quality, clearly explained, well-organized content.

That’s exactly what llms.txt helps them find.

It doesn’t block anything. It doesn’t enforce access rules. It simply offers guidance. Think of it as your content’s elevator pitch for AI—one that says, “Here’s what I offer. Here’s where to find it. And here’s why it matters.”

If your website is already publishing valuable content, llms.txt helps ensure AI tools can see and use it the way you intended. It’s not about tricking the system; it’s about making your site easy to understand in a world where understanding leads to visibility.

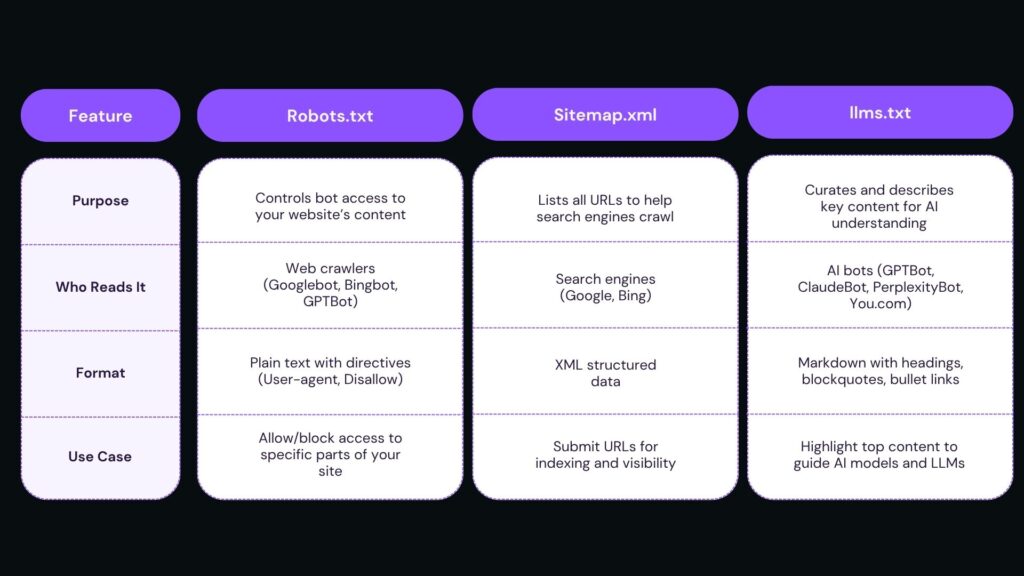

Key Differences: llms.txt vs. robots.txt vs. sitemap.xml

When it comes to making your website discoverable and understandable by both search engines and AI tools, most site owners are already familiar with robots.txt and sitemap.xml. But llms.txt introduces a new layer, one that is not about crawling or indexing but about context and clarity.

To fully prepare your site for traditional search and AI-driven assistants like ChatGPT, Claude, and Perplexity, it’s important to understand how these three files work together, and where each of them serves a distinct role.

Here’s a clear breakdown of how they compare:

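Comparison Table: llms.txt vs robots.txt vs sitemap.xml

| File | Format | What it does | Main audience |
|---|---|---|---|
| robots.txt | Plain-text rules | Tells bots what they may and may not crawl | Search engine and AI crawlers |
| sitemap.xml | XML | Lists the pages you want indexed | Search engines |
| llms.txt | Markdown | Curates your most valuable pages with short descriptions | Large language models and AI assistants |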

Still Confused? Think of It This Way:

- robots.txt is your website’s security gate: it tells bots what not to crawl.

- sitemap.xml is your site’s map: it shows all pages you want indexed by search engines.

- llms.txt is your tour guide for AI: it tells large language models where to focus, what content represents your expertise, and what pages are most valuable to summarize or cite.

Each of these files serves a different audience with a different intent:

- Search engine bots (like Googlebot) obey robots.txt rules and use sitemap.xml to find pages.

- AI tools (like ChatGPT’s GPTBot, ClaudeBot, and PerplexityBot) check robots.txt for permission, but once allowed, they can use llms.txt to see what’s worth reading first.

And unlike a sitemap that lists every blog, category, tag, or archive page, llms.txt is meant to be selective. It doesn’t just help bots find your content; it also helps them understand it quickly.

Why llms.txt Matters for Creators and Marketers

In the age of conversational search, how your content is understood matters just as much as where it ranks.

AI-powered assistants like ChatGPT, Claude, and Perplexity aren’t just randomly crawling the web. They’re actively seeking structured, high-quality sources to reference in response to real-world queries. And when they find those sources? They don’t just list them, they quote, summarize, or recommend them directly to the user.

For creators and marketers, that’s a game-changer.

If your blog, product page, or thought leadership piece becomes part of an AI model’s preferred citation list, it could drive a continuous stream of traffic and brand recognition, without relying solely on SERP rankings.

But here’s the challenge: AI assistants are not traditional search engines. They don’t crawl every link. They don’t wait for backlinks to signal authority. And they don’t guess which pages on your site matter most.

- They need signals.

- They need structure.

- They need context.

That’s precisely what llms.txt delivers.

llms.txt = Faster Understanding, Smarter Citation

When you include an llms.txt file, you’re giving AI bots a clean, structured roadmap of your best content. You’re removing the friction of discovery and reducing the chance of your content being misinterpreted or ignored altogether.

According to llmstxt.org, the format is designed to “enable LLMs to find, understand, and cite high-quality human-authored content more effectively.” That’s not an SEO trick — that’s a strategic visibility move in the AI ecosystem.

Ahrefs noted in their coverage of llms.txt that early adopters are already starting to show up more consistently in tools like Perplexity and Bing Copilot. The file acts as a curation layer, highlighting your most citation-worthy assets and helping AI understand their intent.

And Gartner predicts that by 2026, traditional search engine volume will drop 25% as users turn to AI chatbots and other virtual agents. That means if you’re not optimized for AI now, you’re slowly becoming invisible to tomorrow’s users.

For Marketers, It's More Than Traffic, It's Trust

AI tools don’t just link to your content. They summarize it. They recommend it. They speak on your behalf.

That’s why llms.txt isn’t just a visibility tool; it’s a brand control tool.

When you guide AI to your strongest pages (your case studies, thought leadership, FAQ hubs, or signature blog posts), you’re telling the model, “Here’s how I want to be seen.”

For content creators, this means greater reach without relying entirely on Google’s algorithm updates. For marketers, it means earning trust at scale — being cited as an expert inside answers that users read.

Early Adoption = Long-Term Advantage

Right now, llms.txt is still early in adoption — and that’s good news.

Because the sooner you implement it, the sooner your best content is clearly signposted for AI tools. Assistants built on models like GPT-4o and Claude 3 keep drawing on fresh web content, and a structured file like llms.txt can make it easier for them to spot your strongest pages.

Much like how early schema adopters saw rich snippets long before the masses, the creators and marketers who implement llms.txt today are positioning themselves for early citation, long-term discoverability, and authority placement across the AI web.

How to Create Your llms.txt and llms-full.txt Step-by-Step

Creating an AI-friendly guide to your content isn’t just a good idea anymore — it’s essential. The good news? It only takes a few simple steps to create your own llms.txt and llms-full.txt files, and once they’re live, they act as your 24/7 AI content ambassador.

We’ll break this into two parts:

✅ Part 1: Creating llms.txt, the lightweight, high-level guide for AI bots.

✅ Part 2: Creating llms-full.txt, a more detailed version with metadata, excerpts, and optional content snippets.

Let’s start with the core file first.

Part 1: Create Your llms.txt File (Standard AI-Readable Guide)

Step 1: Open a Blank Text File

Let’s start with the basics.

Open any plain text editor: Notepad (Windows), TextEdit (Mac, in plain text mode), Sublime Text, or VS Code.

Now, create a new file and save it exactly as:

llms.txt

Make sure:

- The name is in lowercase.

- There are no spaces or capital letters.

- The extension is .txt, not .md, .html, or anything else.

This file will live in the root directory of your website, right alongside robots.txt, which is where tools that support the llms.txt convention look for it.

Done correctly, your llms.txt file should be accessible at:

https://yourdomain.com/llms.txt

This tiny file will become one of the most critical content signals you’ll provide to the AI web.

Step 2: Add a Title and Summary

Once your blank file is ready, the next step is to introduce your website in a way that’s easy for large language models (LLMs) to understand.

This isn’t about SEO jargon or stuffing keywords — it’s about writing a short, clear summary that answers a simple question:

“What is this site about, and who is it for?”

Use basic Markdown syntax to do this:

# YourWebsiteName

> A quick summary of what your website is about.

This structure is intentionally lightweight. The “#” denotes a main heading, and the “>” creates a blockquote-style summary, which is easy for LLMs to parse and categorize.

Example for inspiration:

# ranjanmayank.in

> Actionable insights on digital marketing, personal branding, career growth, and AI-powered content strategies — curated for professionals, creators, and marketers building their online presence.

Your summary should ideally:

- Reflect your content themes

- Mention your target audience

- Be concise (1–2 lines max)

- Avoid buzzwords or filler language

Why this matters:

When an AI tool like ChatGPT or Perplexity reads your llms.txt, this summary is the first thing it sees. It helps the tool decide whether your site fits a query’s intent, especially when it’s selecting sources to cite. This one block of text can guide how your content is perceived across the AI web.

Step 3: Create Sections with Headings

Now that you’ve introduced your website, it’s time to organize your content.

This step helps LLMs navigate what types of resources you offer — whether that’s blogs, guides, tools, templates, or case studies.

We’ll do this using Markdown headings, specifically ##, which denotes second-level headers.

Think of these as categories or content buckets. You’re building a logical outline that AI bots (and even human readers) can scan to understand your site’s structure instantly.

Use this format:

## Guides

## Blog Posts

## Templates

## Resources

You can rename or rearrange these sections based on your content strategy. For example:

If you run a design portfolio site, you might use ## Projects, ## Case Studies, and ## Articles. If you’re a course creator, try ## Lessons, ## Student Resources, and ## Webinars.

Each section acts like a signpost, signaling what kind of content lies ahead. It’s a simple technique, but it makes a big difference in how well LLMs interpret and route your content during training or citation.

Pro Tip:

Use the same categories consistently across your llms.txt and llms-full.txt files. This helps build familiarity and semantic consistency for AI readers.

Once your sections are set, you’re ready to start adding links beneath them, which we’ll cover next.

Step 4: Add Bullet Links for Each Page

With your content categories in place, it’s time to populate each section with your most valuable pages, the ones you’d want AI bots like GPTBot or PerplexityBot to understand, summarize, and cite.

This is where the actual “guidance” happens in your llms.txt file.

You’ll do this by listing individual pages using Markdown bullet syntax, with a link and a brief one-line summary that describes the page’s value.

Use this format:

- [Page Title](https://yourdomain.com/page-url): One-line summary of the page.

Each bullet acts like a spotlight, saying:

👉 “This page is important — here’s why.”

Here’s an example:

## Blog Posts

- [Answer Engine Optimization Guide 2025](https://ranjanmayank.in/blog/answer-engine-optimization-guide/): A step-by-step strategy to appear in ChatGPT, SGE, Bing AI and more.

- [Digital Marketing Interview Guide 2025](https://ranjanmayank.in/blog/interview-guide/): 100+ questions and winning answers to prepare for top digital marketing interviews.

- [LinkedIn Profile Optimization Checklist](https://ranjanmayank.in/blog/linkedin-checklist/): A practical checklist to turn your LinkedIn profile into a lead magnet and job magnet.

A Few Tips for Writing the One-Liner:

- Keep it brief: Aim for 15–20 words max.

- Avoid fluff: Focus on what the reader (and AI) would learn or gain.

- Use plain English: No jargon; clarity wins citations.

Remember, llms.txt isn’t a sitemap listing every blog you’ve ever published. It’s a curated list of content that represents your expertise, the content you’d want AI models to associate with your domain, summarize inside answers, and attribute back to you.

Quality matters more than quantity.

Once your sections are filled with these clean, structured links, you’re almost done. One final technical step remains: uploading and going live.
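If you would rather generate the file than type it, the structure from Steps 2 to 4 maps neatly onto a small script. Below is a minimal Python sketch. It assumes you keep your curated pages in a simple dictionary. The site title, summary and pages are placeholders, and `build_llms_txt` is a hypothetical helper name, not part of any tool.

```python
"""Build llms.txt content from a curated list of pages (illustrative sketch)."""

# Hypothetical site data: replace with your own title, summary, and pages.
SITE_TITLE = "yourdomain.com"
SITE_SUMMARY = "Practical guides on digital marketing for creators and marketers."
SECTIONS = {
    "Guides": [
        ("Answer Engine Optimization Guide", "https://yourdomain.com/aeo-guide/",
         "How to get cited by AI assistants."),
    ],
    "Blog Posts": [
        ("LinkedIn Profile Checklist", "https://yourdomain.com/linkedin-checklist/",
         "A checklist for turning your profile into a lead magnet."),
    ],
}


def build_llms_txt(title: str, summary: str, sections: dict) -> str:
    """Return llms.txt text: H1 title, blockquote summary, then ## sections of bullet links."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, pages in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for page_title, url, one_liner in pages:
            lines.append(f"- [{page_title}]({url}): {one_liner}")
        lines.append("")
    # Drop trailing blank lines but keep exactly one final newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Print the result; save it as llms.txt (UTF-8) before uploading.
    print(build_llms_txt(SITE_TITLE, SITE_SUMMARY, SECTIONS), end="")
```

Keeping the data separate from the formatting means you only edit the list of pages when your best content changes.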

Step 5: Save and Upload

Once you’ve added your title, summary, headings, and curated bullet links, it’s time to take your llms.txt live.

This step ensures that AI bots can find and read your file. Even the best-structured llms.txt won’t help if it’s buried in the wrong folder or named incorrectly.

Save the File Properly

Make sure your file is:

- Named exactly llms.txt (lowercase, no spaces, no extra extensions like .txt.txt)

- Saved in plain text format, not rich text (.rtf), Word, or PDF

Then double-check the formatting. It should be:

- Human-readable

- Markdown-friendly

- Free of broken links or copy-paste errors
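Part of this double-check can be automated. The Python sketch below is a minimal linter. It follows this guide's own conventions: an H1 title, a `>` summary, `##` sections, and `- [Title](URL): summary` bullets. It is not an official validator, and `lint_llms_txt` is a hypothetical name.

The linter is meant for llms.txt only, because the metadata bullets in llms-full.txt would be flagged. It also checks structure only, so it will not tell you whether a link actually resolves.

```python
import re

# Patterns for the structure described in Steps 2-4 (a simplified reading, not an official spec).
H1 = re.compile(r"^# \S")
BLOCKQUOTE = re.compile(r"^> \S")
SECTION = re.compile(r"^## \S")
LINK_ITEM = re.compile(r"^- \[(?P<title>[^\]]+)\]\((?P<url>https?://[^\s)]+)\)(?::\s*(?P<note>.+))?$")


def lint_llms_txt(text: str) -> list[str]:
    """Return human-readable problems; an empty list means no structural issues were found."""
    problems = []
    lines = [line.rstrip() for line in text.splitlines()]
    non_blank = [line for line in lines if line]
    if not non_blank or not H1.match(non_blank[0]):
        problems.append("first non-blank line should be an H1 title ('# Site name')")
    if not any(BLOCKQUOTE.match(line) for line in lines):
        problems.append("missing '> summary' blockquote")
    if not any(SECTION.match(line) for line in lines):
        problems.append("no '## Section' headings found")
    for number, line in enumerate(lines, start=1):
        # Every bullet should be a Markdown link to an http(s) URL, optionally followed by ": summary".
        if line.startswith("-") and not LINK_ITEM.match(line):
            problems.append(f"line {number}: bullet is not '- [Title](https://...): summary'")
    return problems
```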

Upload to Your Website’s Root Directory

Now, use your hosting control panel (like cPanel), FTP client (like FileZilla), or WordPress file manager plugin to upload the file.

Place it in the root directory of your website, which is typically:

- /public_html/

- /www/

- /htdocs/


Part 2: Create Your llms-full.txt File (Extended AI Context File)

If llms.txt is your lightweight guide for AI bots, think of llms-full.txt as the extended version — packed with helpful metadata like publish dates, last modified timestamps, and meaningful excerpts.

This file provides a richer context, enabling language models to interpret and summarize your most valuable pages more effectively. It’s especially useful if you want to:

- Help AI tools distinguish updated content

- Provide a quick summary without parsing full HTML

- Improve chances of being cited, linked, or summarized accurately.

Let’s walk through it step by step.

Step 1: Duplicate Your llms.txt File

Open your existing llms.txt, then:

- Save a new copy

- Rename it to llms-full.txt

This ensures the structure and sections remain consistent.

Step 2: Add Metadata Below Each Link

Now, under each bullet link, add metadata in the following format:

- [Title](URL)

- Published: YYYY-MM-DD

- Last Modified: YYYY-MM-DD

- Excerpt: One-line summary of the page.


Example from ranjanmayank.in:

## Blog Posts

- [Digital Marketing Interview Guide 2025](https://ranjanmayank.in/blog/digital-marketing-interview-guide/)

- Published: 2025-03-15

- Last Modified: 2025-03-20

- Excerpt: A curated list of 100+ Q&As to help professionals crack marketing interviews in 2025.

- [Answer Engine Optimization Guide 2025](https://ranjanmayank.in/blog/answer-engine-optimization-guide/)

- Published: 2025-05-22

- Last Modified: 2025-05-28

- Excerpt: A step-by-step strategy to appear in ChatGPT, SGE, Bing AI and more — not just ranked, but referenced.

- [Personal Branding Strategy for Professionals](https://ranjanmayank.in/blog/personal-branding-strategy/)

- Published: 2025-02-10

- Last Modified: 2025-03-01

- Excerpt: A complete guide to building personal authority through LinkedIn, content, and visibility.

This additional information gives bots temporal awareness: they can see how current and active your site is, which may help them judge its freshness and trustworthiness.
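Date typos, such as a missing digit in YYYY-MM-DD, are easy to make by hand. If you keep your entries as simple records, a small Python class can validate the dates before rendering each entry in the Published / Last Modified / Excerpt layout from Step 2. This is a sketch: `PageEntry`, its fields and `parse_iso` are illustrative names, not a standard schema.

```python
import re
from dataclasses import dataclass
from datetime import date

ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def parse_iso(value: str, field: str) -> date:
    """Accept only zero-padded YYYY-MM-DD dates that exist on the calendar."""
    if not ISO_DATE.match(value):
        raise ValueError(f"{field} must look like YYYY-MM-DD, got {value!r}")
    return date.fromisoformat(value)  # also rejects impossible dates such as 2025-02-30


@dataclass
class PageEntry:
    """One llms-full.txt entry; these field names are illustrative, not a standard."""
    title: str
    url: str
    published: str
    last_modified: str
    excerpt: str

    def to_markdown(self) -> str:
        """Validate the dates, then render the link line followed by its metadata lines."""
        if parse_iso(self.last_modified, "Last Modified") < parse_iso(self.published, "Published"):
            raise ValueError(f"{self.title}: Last Modified is earlier than Published")
        return "\n".join([
            f"- [{self.title}]({self.url})",
            f"- Published: {self.published}",
            f"- Last Modified: {self.last_modified}",
            f"- Excerpt: {self.excerpt}",
        ])
```

Failing loudly on a bad date is deliberate. A malformed date in the file would quietly undermine the freshness information that llms-full.txt exists to provide.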

Step 3: (Optional) Add Content Snippets

Want to go the extra mile? You can add short excerpts from the actual content itself — no more than a few sentences per page.

### Digital Marketing Interview Guide 2025 Content:

This guide compiles 100+ real interview questions with expert answers across SEO, SEM, social media, and analytics. Designed for both beginners and experienced marketers, it’s your one-stop prep toolkit for 2025 hiring trends.

Snippets help LLMs understand tone, intent, and relevance, especially if they can’t parse your page due to JS rendering or design complexity.

Step 4: Upload It to Your Server

Save your file as llms-full.txt and upload it to the same root directory where your llms.txt and robots.txt live.

Your live URL should be:

https://yourdomain.com/llms-full.txt

You can verify it by opening the URL in your browser. If it shows the full file as plain text, congrats: your file is live and readable by any AI tool that looks for it.
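A browser check works, but a script can also catch two common problems:

- A wrong Content-Type header.
- A CMS that answers a missing file with a normal HTML page.

The Python sketch below assumes plain text (`text/plain` or `text/markdown`) is what you want to serve. `evaluate_response` and `check_url` are hypothetical helper names, and only `check_url` touches the network.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

ACCEPTED_TYPES = ("text/plain", "text/markdown")


def evaluate_response(status: int, content_type: str, body: str) -> list[str]:
    """Return a list of problems with a fetched llms.txt or llms-full.txt response."""
    problems = []
    if status != 200:
        problems.append(f"expected HTTP 200, got {status}")
    if not content_type.lower().startswith(ACCEPTED_TYPES):
        problems.append(f"unexpected Content-Type {content_type!r}")
    if "<html" in body[:500].lower():
        problems.append("body looks like an HTML page, not a plain-text file")
    return problems


def check_url(url: str, timeout: float = 10.0) -> list[str]:
    """Fetch url and evaluate the response (requires network access)."""
    request = Request(url, headers={"User-Agent": "llms-txt-check/0.1"})
    try:
        with urlopen(request, timeout=timeout) as response:
            body = response.read(65536).decode("utf-8", errors="replace")
            return evaluate_response(response.status,
                                     response.headers.get("Content-Type", ""), body)
    except HTTPError as err:  # 4xx and 5xx responses raise instead of returning
        return [f"expected HTTP 200, got {err.code}"]
    except OSError as err:    # DNS failures, refused connections, timeouts
        return [f"could not fetch {url}: {err}"]
```

For example, `print(check_url("https://yourdomain.com/llms-full.txt"))` should print an empty list when everything looks right.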

Step 5: Update It When Content Changes

Consistency and freshness matter. Every time you:

- Publish a new blog

- Update an existing article

- Change a URL or modify the structure

…be sure to update your llms-full.txt file with:

- The new or revised Published / Last Modified dates

- A fresh one-line excerpt

- Any changes in order (you can list the most recent posts first)

This helps AI tools index your site more accurately over time, especially when they re-crawl for updated citations and summaries.
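To spot entries you forgot to refresh, you can compare the Last Modified dates in your file with the dates your CMS reports. This Python sketch makes two assumptions:

- The file uses the flat link-then-metadata layout from this guide.
- You have exported a dictionary mapping each URL to its date from your CMS. `cms_dates` is a hypothetical input.

The script flags pages whose recorded date is older than the CMS date, plus pages missing from the file.

```python
import re

# Layout assumed: a "- [Title](URL)" line followed by "- Last Modified: YYYY-MM-DD" (as in this guide).
LINK_LINE = re.compile(r"^- \[[^\]]+\]\((?P<url>[^)\s]+)\)")
MODIFIED_LINE = re.compile(r"^- Last Modified: (?P<date>\d{4}-\d{2}-\d{2})$")


def recorded_dates(llms_full_text: str) -> dict[str, str]:
    """Map each linked URL to the Last Modified date listed beneath it."""
    dates = {}
    current_url = None
    for raw_line in llms_full_text.splitlines():
        line = raw_line.strip()
        link = LINK_LINE.match(line)
        if link:
            current_url = link.group("url")
            continue
        modified = MODIFIED_LINE.match(line)
        if modified and current_url:
            dates[current_url] = modified.group("date")
    return dates


def stale_entries(llms_full_text: str, cms_dates: dict[str, str]) -> list[str]:
    """List URLs missing from the file or whose CMS date is newer than the recorded one."""
    recorded = recorded_dates(llms_full_text)
    # Zero-padded ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    return [url for url, cms_date in cms_dates.items()
            if url not in recorded or recorded[url] < cms_date]
```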

Bonus: Automate the Process (Optional for WordPress Users)

If your website is built on WordPress, maintaining your llms.txt and llms-full.txt doesn’t have to be a manual headache. With the right tools, you can automate or semi-automate everything, from data extraction to uploading, and keep your content AI-friendly without added effort.

Here’s how you can do it:

Option 1: Use WP All Export to Generate Structured Content

1. Install the WP All Export Plugin

From your WordPress dashboard, go to Plugins → Add New → Search for “WP All Export” and install it.

2. Create a Custom Export

Choose Posts as your export type. Then select these key fields:

- Post Title

- Post URL (Permalink)

- Publish Date

- Last Modified Date

- Excerpt or Meta Description

3. Format as Markdown (Optional)

WP All Export lets you create a custom export template. You can structure the output directly in Markdown format, like so:

- [Post Title](https://yourdomain.com/post-url)

- Published: YYYY-MM-DD

- Last Modified: YYYY-MM-DD

- Excerpt: Your one-line summary here.

4. Download the Export

Save your export as .txt and review the content.

Use WP File Manager to Upload Instantly

Once your file is ready, you can upload it directly to your site’s root directory using the WP File Manager plugin, no FTP or hosting panel needed.

- Install WP File Manager: Go to Plugins → Add New → Search for “WP File Manager” and activate it.

- Navigate to the Root Directory: Open WP File Manager and look for folders like /public_html/, /www/, or /htdocs/.

- Upload Your File: Drag and drop your llms.txt and llms-full.txt files into the root directory.

- Test the URLs: Make sure your files load publicly at https://yourdomain.com/llms.txt and https://yourdomain.com/llms-full.txt.

This method is super convenient for WordPress users who want full control without leaving the WP dashboard.

Option 2: Use ChatGPT to Format the Data

After exporting blog metadata via WP All Export, simply ask ChatGPT to format it using a prompt like:

“Convert this list of blog titles, URLs, publish dates, and excerpts into a Markdown list for llms-full.txt format.”

You’ll get clean, AI-readable content that’s copy-paste ready.

Option 3: Automate It with Scripts + Cron Jobs (Advanced)

If you’re technically inclined or have a developer on your team:

- Write a script that fetches post data via the WordPress REST API or your sitemap.

- Output it in Markdown format.

- Set a cron job to update llms-full.txt weekly.

It’s fully automated and ideal for content-heavy websites.
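Here is a minimal Python sketch of such a script. It reads the public WordPress REST API posts endpoint (`/wp-json/wp/v2/posts`) and pages through the results using the `X-WP-TotalPages` response header. The sketch assumes two things:

- The REST API is publicly reachable on your site.
- The excerpts WordPress generates are acceptable as summaries.

Helper names such as `post_to_entry` and `build_llms_full` are hypothetical.

```python
import html
import json
import re
from urllib.request import urlopen

TAG = re.compile(r"<[^>]+>")


def clean(rendered: str) -> str:
    """Strip HTML tags and entities from a WordPress 'rendered' field and collapse whitespace."""
    return " ".join(html.unescape(TAG.sub("", rendered)).split())


def post_to_entry(post: dict) -> str:
    """Convert one post object from /wp-json/wp/v2/posts into an llms-full.txt entry."""
    return "\n".join([
        f"- [{clean(post['title']['rendered'])}]({post['link']})",
        f"- Published: {post['date'][:10]}",  # 'date' looks like 2025-03-15T09:30:00
        f"- Last Modified: {post['modified'][:10]}",
        f"- Excerpt: {clean(post['excerpt']['rendered'])}",
    ])


def fetch_posts(site: str) -> list[dict]:
    """Page through the public posts endpoint, 100 posts per request (network access required)."""
    posts, page, total_pages = [], 1, 1
    while page <= total_pages:
        url = (f"{site}/wp-json/wp/v2/posts?per_page=100&page={page}"
               "&_fields=title,link,date,modified,excerpt")
        with urlopen(url, timeout=30) as response:
            total_pages = int(response.headers.get("X-WP-TotalPages", "1"))
            posts.extend(json.load(response))
        page += 1
    return posts


def build_llms_full(site: str, title: str) -> str:
    """Return llms-full.txt content for all published posts on site (hypothetical helper)."""
    entries = [post_to_entry(post) for post in fetch_posts(site)]
    return f"# {title}\n\n## Blog Posts\n\n" + "\n\n".join(entries) + "\n"
```

To run it on a schedule:

1. Write a small wrapper that calls `build_llms_full("https://yourdomain.com", "yourdomain.com")` and saves the result as llms-full.txt in your web root.
2. Schedule the wrapper with cron. For example, `0 3 * * 1 python3 /path/to/your_script.py` runs it every Monday at 03:00; the path is a placeholder.

WordPress's automatic excerpts run to about 55 words, so you may want to trim them to one line.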

Why You Should Automate llms.txt

Keeping your AI-accessible files fresh boosts your visibility in:

- ChatGPT, Perplexity, Claude, and other AI tools

- Google’s SGE and AI Overviews

- Any LLMs that rely on structured, trustworthy content sources

And by using tools like WP All Export + WP File Manager, you don’t need to touch cPanel or code to stay ahead in the AI-driven web.

Below are ready-to-use tools you can apply right away:

1. WP All Export Template (for Markdown output)

When configuring your export in WP All Export, use this custom export template to generate AI-readable entries:

- [{post_title}]({permalink})

- Published: {post_date}

- Last Modified: {post_modified}

- Excerpt: {post_excerpt}

✅ This will give you a copy-paste-ready version of your llms-full.txt.

If you don’t use post excerpts in WordPress, replace {post_excerpt} with a meta field (like your SEO meta description) or leave it blank.

2. ChatGPT Prompt for Converting CSV to Markdown

If you already exported blog data as a spreadsheet or CSV, just paste it into ChatGPT with this prompt:

Prompt:

“Convert this list of blog titles, URLs, publish dates, modified dates, and summaries into llms-full.txt format using Markdown like this:

- [Post Title](URL)

- Published: YYYY-MM-DD

- Last Modified: YYYY-MM-DD

- Excerpt: One-line summary.

Format the response cleanly and preserve links.”

This will transform your raw blog data into something like:

- [Personal Branding Blueprint](https://ranjanmayank.in/blog/personal-branding/)

- Published: 2025-02-01

- Last Modified: 2025-03-10

- Excerpt: Learn how to build your online authority step-by-step using proven personal branding tactics.
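If you would rather not paste your data into a chatbot, Python's built-in `csv` module can do the same conversion deterministically. This sketch assumes your CSV has a header row with the columns title, url, published, modified and excerpt. These are hypothetical names, so rename `COLUMNS` to match your export.

```python
import csv
import io

# Hypothetical column names; rename them to match the headers in your export.
COLUMNS = ("title", "url", "published", "modified", "excerpt")


def csv_to_llms_full(csv_text: str) -> str:
    """Turn CSV rows into llms-full.txt entries, one blank line between entries."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [column for column in COLUMNS if column not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"CSV is missing columns: {', '.join(missing)}")
    entries = []
    for row in reader:
        entries.append("\n".join([
            f"- [{row['title'].strip()}]({row['url'].strip()})",
            f"- Published: {row['published'].strip()}",
            f"- Last Modified: {row['modified'].strip()}",
            f"- Excerpt: {row['excerpt'].strip()}",
        ]))
    return "\n\n".join(entries) + "\n"
```

Because the csv module handles quoted fields, excerpts that contain commas come through intact. That is a common place where manual copy-paste goes wrong.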

Real Example: My AI-Optimized Blog Setup

To help you see how this works in the real world, not just in theory, let’s walk through how I’ve implemented the AI-ready content infrastructure on my website, ranjanmayank.in.

I’ve built this setup using robots.txt, llms.txt, and llms-full.txt to ensure my content is not just searchable by Google, but also visible and referenceable by AI bots like GPTBot (ChatGPT), ClaudeBot, and PerplexityBot.

1. Live File Access (You Can View These Yourself)

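Here are the exact files I’ve configured; open and inspect them freely:

- https://ranjanmayank.in/robots.txt

- https://ranjanmayank.in/llms.txt

- https://ranjanmayank.in/llms-full.txt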

Each file has a distinct purpose, and together they create an AI-optimized, transparent content structure.

2. My robots.txt: Allow the Good, Block the Noise

My robots.txt file does more than just list a sitemap. It gives custom crawl rules for both SEO and AI bots:

✅ Allowed:

- Googlebot

- Bingbot

- GPTBot (ChatGPT)

- ClaudeBot

- PerplexityBot

- Social bots (LinkedInBot, Twitterbot, Facebookexternalhit)

❌ Disallowed:

- AhrefsBot, SemrushBot, MJ12bot (SEO scrapers)

- Baiduspider, Yandex (regional crawlers I don’t need)

🧠 Special Directives:

Sitemap: https://ranjanmayank.in/sitemap.xml

LLM-Policy: https://ranjanmayank.in/llms.txt

# Content-List: https://ranjanmayank.in/llms-full.txt

This setup gives AI tools explicit access while keeping bandwidth free from scrapers or irrelevant bots.

3. My llms.txt: A Clean Map for LLMs

This file provides a curated, AI-readable list of my best resources, organized into categories like:

## Blog Posts

- [Answer Engine Optimization Guide 2025](https://ranjanmayank.in/blog/answer-engine-optimization-guide/): A practical framework to rank on AI tools like ChatGPT, Google SGE, and Perplexity.

- [LinkedIn Profile Optimization Guide](https://ranjanmayank.in/blog/linkedin-optimization/): Step-by-step instructions to turn your LinkedIn into a personal brand magnet.

It’s simple, but powerful — giving AI bots the top-level context they need to understand what kind of content I publish.

4. My llms-full.txt: Rich Metadata for AI Readers

This is where things go from good to great. My llms-full.txt adds real metadata that tells bots:

- When the content was published

- When it was last updated

- What the page is really about

📌 Real Example from My File:

- [Digital Marketing Interview Guide 2025](https://ranjanmayank.in/blog/digital-marketing-interview-guide/)

- Published: 2025-03-15

- Last Modified: 2025-03-20

- Excerpt: 100+ Q&As covering SEO, content marketing, paid ads, and analytics — tailored for 2025 roles.

This format is easy to generate manually or via tools like WP All Export + ChatGPT (as explained earlier).


Why This Setup Works

This setup isn’t just about compliance; it’s about discoverability in the AI era:

- AI tools can crawl and cite my content, even if Google doesn’t rank it #1.

- LLMs get clean, structured signals of trust and freshness.

- I decide which crawlers are welcome, balancing access and protection.

In a world where people ask AI before they Google, structuring your content for AI tools is the new SEO.

Setting Up robots.txt to Support LLM Crawlers

While robots.txt has traditionally been used to control how search engine bots crawl your website, it now plays a new strategic role: guiding AI bots like GPTBot (ChatGPT), ClaudeBot, PerplexityBot, and others.

If you want AI tools to discover, understand, and cite your content, not just have Google index it, then your robots.txt needs to explicitly allow trusted LLM bots and reference your llms.txt files.

Let’s walk through how to do this the right way.

1. Allow Trusted AI Bots (LLMs)

These are the AI crawlers you should explicitly allow in your robots.txt if you want your content to be available to AI assistants and AI-powered search:

Recommended Allow Format:

User-agent: GPTBot

Allow: /

User-agent: ClaudeBot

Allow: /

User-agent: PerplexityBot

Allow: /

User-agent: Google-Extended

Allow: /

User-agent: CCBot

Allow: /

This setup tells major AI platforms:

“Yes, you’re welcome to crawl and learn from my public content.”

2. Block Competitive SEO Scrapers (Optional but Smart)

Some bots (like AhrefsBot or SemrushBot) scrape your data primarily for their SEO platforms, often without contributing to your content visibility.

You can safely disallow these bots to protect your site’s bandwidth and content integrity:

User-agent: AhrefsBot

Disallow: /

User-agent: SemrushBot

Disallow: /

User-agent: MJ12bot

Disallow: /

Blocking these bots won’t hurt your SEO — they don’t influence Google ranking.

3. Reference your llms.txt and llms-full.txt

AI bots need to know where to find your AI-friendly files. Their standard location is your domain root, but you can also point to them from robots.txt with two simple lines:

LLM-Policy: https://yourdomain.com/llms.txt

# Content-List: https://yourdomain.com/llms-full.txt

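LLM-Policy is not part of the official robots.txt standard (RFC 9309), so crawlers that don’t recognize it simply ignore it; treat it as a low-cost hint, not a guaranteed signal. The second line starts with #, which makes it a comment: parsers skip it entirely, but it documents where your extended file lives for anyone reading your robots.txt.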

Sample Full robots.txt Setup (Best Practice)

# Sitemap reference

Sitemap: https://yourdomain.com/sitemap.xml

# AI crawling policy

LLM-Policy: https://yourdomain.com/llms.txt

# Content-List: https://yourdomain.com/llms-full.txt

# Allow trusted AI bots

User-agent: GPTBot

Allow: /

User-agent: ClaudeBot

Allow: /

User-agent: PerplexityBot

Allow: /

User-agent: Google-Extended

Allow: /

User-agent: CCBot

Allow: /

# Allow major SEO bots

User-agent: Googlebot

Allow: /

User-agent: Bingbot

Allow: /

# Allow social bots

User-agent: LinkedInBot

Allow: /

User-agent: Twitterbot

Allow: /

User-agent: facebookexternalhit

Allow: /

# Block competitive scrapers

User-agent: AhrefsBot

Disallow: /

User-agent: SemrushBot

Disallow: /

User-agent: MJ12bot

Disallow: /

# Catch-all fallback

User-agent: *

Disallow: /private/

Allow: /

A well-configured robots.txt doesn’t just protect your content — it positions your website as AI-citable. And when your links show up inside ChatGPT or Google AI Overviews, you become the answer, not just another search result.

Make sure to:

- Keep your robots.txt clean and organized

- Reference your llms.txt and llms-full.txt

- Update it when you change file locations or policies
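Before uploading changes, you can sanity-check how a standards-following parser reads your rules with Python's built-in `urllib.robotparser`. This sketch parses a trimmed copy of the sample robots.txt from a string, so it makes no network requests. Two caveats apply:

- This parser applies the first matching rule in a group rather than Google's longest-match rule, so treat the result as a quick check.
- It silently ignores the non-standard LLM-Policy line, just as any crawler that doesn't recognize it would.

```python
from urllib.robotparser import RobotFileParser

# Trimmed copy of the sample robots.txt; keep each User-agent line directly
# above its rules, because a blank line there ends the group for this parser.
SAMPLE_ROBOTS_TXT = """\
Sitemap: https://yourdomain.com/sitemap.xml
LLM-Policy: https://yourdomain.com/llms.txt
# Content-List: https://yourdomain.com/llms-full.txt

User-agent: GPTBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: AhrefsBot
Disallow: /

User-agent: *
Disallow: /private/
Allow: /
"""


def parse_robots(text: str) -> RobotFileParser:
    """Parse robots.txt content held in a string (no network request)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def access_report(parser: RobotFileParser, agents: list[str], url: str) -> dict[str, bool]:
    """Map each user-agent name to whether the parser lets it fetch url."""
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    robots = parse_robots(SAMPLE_ROBOTS_TXT)
    report = access_report(robots, ["GPTBot", "ClaudeBot", "AhrefsBot", "SomeOtherBot"],
                           "https://yourdomain.com/blog/")
    for agent, allowed in report.items():
        print(f"{agent:>12}: {'allowed' if allowed else 'blocked'}")
    # Only the Sitemap line is recognized; LLM-Policy is not exposed by this parser.
    print("Sitemaps:", robots.site_maps())
```

To test your live file instead, paste its contents into `SAMPLE_ROBOTS_TXT`. Alternatively, use `RobotFileParser`'s own `set_url()` and `read()` methods, which do fetch over the network.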

What You Should Do

✔️ Use Clean Markdown Formatting

AI bots process simple text formats best. That’s why llms.txt should follow standard Markdown:

- [Post Title](https://yourdomain.com/post-url): A one-line summary

In llms-full.txt, go further by adding publish date, modified date, and excerpt. This gives bots context about freshness and relevance.

✔️ Highlight Only Your Best Content

Think of this as your website’s AI-friendly showcase. You don’t need to list every page — just the most valuable, public-facing ones:

- In-depth blogs

- Resources and guides

- Free tools or frameworks

This helps AI crawlers like GPTBot or ClaudeBot prioritize what to crawl and cite.

✔️ Keep It Updated

Every time you publish, update, or revise a blog post, don’t forget to:

- Add it to llms.txt

- Update dates and summaries in llms-full.txt

An outdated or inaccurate file can misrepresent your content to AI tools and suggest your site is neglected, which may lower visibility.

✔️ Test Visibility

Check if your content is appearing in AI tools. Here’s how:

- Ask ChatGPT (with web search enabled) to search your domain

- Use Perplexity’s search bar (site:yourdomain.com)

- Search for your blog title in Bing Copilot

If your pages display proper titles and summaries, your implementation is working!

What You Shouldn’t Do

❌ Don’t Add Disallow: Rules in llms.txt

llms.txt is not a blocking file. That’s what robots.txt is for.

Adding Disallow: in llms.txt confuses its purpose and may backfire.

If what you actually want is to allow access, do it in robots.txt instead:

User-Agent: *

Disallow:

This explicitly allows all AI bots to access your content
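A quick lint step can catch robots.txt rules that have slipped into llms.txt. This sketch flags any line that starts with a common robots.txt directive; the directive list is an assumption you can extend.

```python
import re

# robots.txt-style directives that don't belong in llms.txt (assumed list).
DIRECTIVE_RE = re.compile(r"^\s*(user-agent|disallow|allow|crawl-delay)\s*:", re.IGNORECASE)


def find_robots_directives(llms_txt: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs in llms.txt that look like robots.txt rules."""
    return [
        (number, line.rstrip())
        for number, line in enumerate(llms_txt.splitlines(), start=1)
        if DIRECTIVE_RE.match(line)
    ]
```

Running it before each upload means a stray Disallow: line gets moved to robots.txt before any bot sees it.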

❌ Don’t Dump Every Page You Have

More is not better. AI bots value quality and context, not long lists of contact pages, policy pages, or category tags.

Stick to:

- Valuable posts

- Guides

- High-converting resources

Curate like you’re building a portfolio.

❌ Don’t Forget to Reference It in robots.txt

This is a common oversight. If you don’t reference llms.txt or llms-full.txt in robots.txt, some bots may not know where to find them.

Use:

LLM-Policy: https://yourdomain.com/llms.txt

# Content-List: https://yourdomain.com/llms-full.txt

It’s a simple fix with a big impact.
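LLM-Policy and Content-List are conventions rather than part of the robots.txt standard (RFC 9309), so treat them as hints; crawlers that don’t recognize a line generally skip it. To confirm the references are still present after you edit robots.txt, a small check like this works. The function names are hypothetical, and the pattern accepts both active and commented-out lines, as in the example above.

```python
import re

# "LLM-Policy:" / "Content-List:" lines, whether active or commented out with "#".
REF_RE = re.compile(
    r"^[ \t]*#?[ \t]*(LLM-Policy|Content-List)[ \t]*:[ \t]*(\S+)",
    re.IGNORECASE | re.MULTILINE,
)


def llms_references(robots_txt: str) -> dict[str, str]:
    """Map each reference name (lower-cased) to the URL it points at."""
    return {m.group(1).lower(): m.group(2) for m in REF_RE.finditer(robots_txt)}


def missing_references(robots_txt: str) -> list[str]:
    """Names of the expected reference lines that robots.txt lacks."""
    refs = llms_references(robots_txt)
    return [name for name in ("llm-policy", "content-list") if name not in refs]
```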

❌ Don’t Include Private or Broken Links

Double-check that:

Every link works

Pages are public (no password or login gates)

Content isn’t marked “noindex” or blocked elsewhere

If a bot hits a 404 or locked page, it may stop crawling the rest of your file.
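You can automate this check. A minimal sketch, assuming the links in llms.txt are absolute Markdown links: it sends a HEAD request to each URL with urllib and flags non-200 responses or an X-Robots-Tag header containing noindex. It cannot see a noindex meta tag inside the HTML, and some servers reject HEAD requests, so treat a failure as a prompt to check manually. The fetch function is injectable so you can test the logic without network access; the User-Agent string is a hypothetical placeholder.

```python
import re
import urllib.error
import urllib.request
from typing import Callable

LINK_RE = re.compile(r"\]\((https?://[^)\s]+)\)")


def fetch_status(url: str) -> tuple[int, str]:
    """HEAD the URL; return (HTTP status, X-Robots-Tag header). Status 0 = unreachable."""
    req = urllib.request.Request(
        url, method="HEAD",
        headers={"User-Agent": "llms-link-check/0.1"},  # hypothetical UA string
    )
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:  # follows redirects
            return resp.status, resp.headers.get("X-Robots-Tag", "")
    except urllib.error.HTTPError as err:  # 4xx/5xx responses
        return err.code, err.headers.get("X-Robots-Tag", "")
    except (urllib.error.URLError, TimeoutError):  # DNS failure, refused, timed out
        return 0, ""


def audit_links(llms_txt: str,
                fetch: Callable[[str], tuple[int, str]] = fetch_status) -> list[str]:
    """List a problem for each linked URL that isn't 200 or carries a noindex header."""
    problems = []
    for url in LINK_RE.findall(llms_txt):
        status, robots_tag = fetch(url)
        if status != 200:
            problems.append(f"{url}: HTTP {status}")
        elif "noindex" in robots_tag.lower():
            problems.append(f"{url}: X-Robots-Tag noindex")
    return problems
```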

A well-built llms.txt isn’t a checklist item; it’s a strategic asset. It speaks directly to AI tools, telling them what your content covers and why it matters.

Follow these dos and don’ts, and you won’t just be indexed — you’ll be cited.

Best Practices for AI Search Visibility (ChatGPT, Perplexity, SGE)

Creating an llms.txt file is a powerful first step, but it’s only one part of the visibility equation. To truly become discoverable, referenced, and quoted by AI tools like ChatGPT, Perplexity, Bing Copilot, and Google’s Search Generative Experience (SGE), you need to combine smart formatting, semantic structure, and quotable value.

Let’s explore the best practices that elevate your content from just being crawlable to being chosen.

Structured Content + llms.txt = AI-Friendly Indexing

AI tools love order. When your blog posts follow a clear structure and your llms.txt gives them curated, categorized access, you improve your chances of inclusion.

What structure works best?

Headings (<h2>, <h3>) for logical content flow

Bullet points, numbered lists, and FAQs

Internal linking to topic clusters

Consistent language style across your content

Use Schema Markup (Especially for FAQs & How-Tos)

Structured data, such as the FAQ schema and the How-To schema, helps both Google and AI tools understand the content’s purpose.

- The FAQ schema helps your answers become eligible for AI snippets or instant answers.

- How-To schema improves your visibility in visual results and “steps” formatting in SGE or Bing

Bonus: Some AI tools, such as Perplexity, may also utilize schema-enhanced metadata to determine snippet sources more accurately.
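FAQ schema is usually embedded in the page as JSON-LD. This sketch builds a schema.org FAQPage object (Question items, each with an acceptedAnswer) from question and answer pairs and wraps it in a script tag; whether a given AI tool reads this markup is not guaranteed. The function name is hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD <script> block from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'
```

The questions and answers in the markup should match the visible FAQ text on the page.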

Write Concise, Quotable Content (That Sounds Like a Direct Answer)

LLMs are not looking for fluff — they’re looking for clarity.

Ask yourself:

- Can this paragraph be quoted in ChatGPT as a standalone answer?

- Is the headline punchy enough to act as a citation title?

- Does each subheading offer a complete idea?

When you write for real people and real AI models, you’re writing for the future of search.

A practical framework for this is in the Answer Engine Optimization (AEO) Guide 2025.

Monitor Who’s Citing You (Yes, Even AI Tools!)

Want to know if your content is being used as a source in AI tools?

Here’s how to monitor it:

Perplexity: Search for your domain or post title. If it appears in citations, congrats, you’re in the loop!

ChatGPT (web search): Ask about your topics with search enabled to see if your site is referenced in real-time answers.

Bing AI: Type site:yourdomain.com or test prompt-based queries on your blog topics.

Claude AI: Ask it to summarize your blog post URL. If it fetches and quotes correctly, you’re in.
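Beyond manual checks, your analytics or server logs can show visitors who arrive from AI tools. This sketch tallies referrer URLs by tool. The referrer hostnames listed are assumptions, so confirm the exact values in your own data before relying on the counts.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed AI-assistant referrer hosts; verify against your own analytics.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def ai_referrals(referrers) -> Counter:
    """Count referrer URLs whose host matches (or is a subdomain of) a known AI tool."""
    counts = Counter()
    for ref in referrers:
        host = urlparse(ref).hostname or ""  # "-" or empty referrers yield ""
        for domain, tool in AI_REFERRERS.items():
            if host == domain or host.endswith("." + domain):
                counts[tool] += 1
    return counts
```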

🛠 Pro Tip: Set up Google Alerts or brand monitoring tools (like Brand24 or Mention) to track backlinks and citations coming from new LLM platforms.

AI is reshaping how content is discovered, consumed, and credited.

When you combine:

a clean llms.txt

a rich llms-full.txt

structured, helpful, quotable content

and visibility monitoring…

You’re no longer just building content for Google — you’re building authority for the next generation of AI-powered search.

FAQs About llms.txt

llms.txt is gaining attention, but it’s still new territory for many marketers, bloggers, and SEOs. Here are answers to the most common questions people ask:

Is llms.txt mandatory?

No, it’s not mandatory — yet.

Search engines don’t currently require it, but AI bots like GPTBot, ClaudeBot, and PerplexityBot may pick it up as a signal. Think of it like a “robots.txt for AI”: optional, but highly recommended if you want visibility in LLM-powered platforms.

Will llms.txt improve my SEO rankings?

Not directly. llms.txt doesn’t affect your Google rankings in traditional search. However, it can increase your chances of being cited, referenced, or quoted by AI tools like ChatGPT, Bing AI, and Perplexity. That exposure can drive referral traffic, backlinks, and brand authority, all of which indirectly benefit SEO.

How often should I update llms.txt?

Anytime your content changes.

Update llms.txt and llms-full.txt whenever you:

Publish a new blog post or guide

Update an old post’s content or metadata

Remove outdated pages from your site

Pro tip: Schedule a monthly reminder or automate it via a WordPress plugin or script.
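If you go the script route, one simple building block is a staleness check you can run from a scheduler such as cron. This sketch, with a hypothetical 30-day threshold and a hypothetical web-root path, reports whether llms.txt or llms-full.txt is missing or hasn’t been modified recently.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 86_400


def is_stale(path, max_age_days: float = 30) -> bool:
    """True if the file is missing or was last modified more than max_age_days ago."""
    p = Path(path)
    if not p.exists():
        return True
    age_seconds = time.time() - p.stat().st_mtime
    return age_seconds > max_age_days * SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical web-root path; point it at your own files.
    for name in ("llms.txt", "llms-full.txt"):
        target = Path("/var/www/html") / name
        if is_stale(target):
            print(f"Reminder: review and update {target}")
```

A file’s modification time only shows when it was last saved, not whether its entries are complete, so pair this reminder with a review of newly published posts.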

Can I block AI bots and still use llms.txt?

Technically yes, but why would you?

You can use robots.txt to block bots like GPTBot, even if you have an llms.txt. But doing so defeats the entire purpose — you’re telling LLMs not to crawl your content, which means you won’t be quoted or cited.

What's the difference between llms.txt and llms-full.txt?

llms.txt is the simple, curated entry point for AI bots. llms-full.txt is a richer file that includes metadata like:

Publish date

Last modified date

Excerpts/snippets

Together, they work like a roadmap + encyclopedia for AI crawlers.

Where should I host these files?

Right in your website’s root directory.

That’s typically /public_html/ or /www/.

The final URLs should look like:

https://yourdomain.com/llms.txt

https://yourdomain.com/llms-full.txt

If you’re using WordPress, tools like WP File Manager make uploading quick and easy.

How do I test if it's working?

Use these methods:

Visit the URL directly and check formatting.

You can also use the LLMs.txt Checker Chrome extension by David Dias.

Ask ChatGPT (with web search enabled) to summarize your blog link. If it fetches your page, it’s working.

Type your site name in Perplexity AI and look for citations.

Check server logs to see if GPTBot, ClaudeBot, or PerplexityBot are crawling your file.
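For the server-log check, a short script can count AI-crawler requests for both files. This sketch assumes the common Apache/Nginx “combined” log format and matches bot names as substrings of the user-agent string; adjust the regular expression if your log format differs. User-agent strings can be spoofed, so treat the counts as indicative rather than proof.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format.
LOG_RE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
AI_BOTS = ("GPTBot", "ClaudeBot", "PerplexityBot")
FILES = ("/llms.txt", "/llms-full.txt")


def ai_bot_hits(log_lines) -> Counter:
    """Count (bot, path, status) for AI-bot requests to llms.txt and llms-full.txt."""
    hits = Counter()
    for line in log_lines:
        m = LOG_RE.search(line)
        if not m or m["path"] not in FILES:
            continue
        agent = m["agent"].lower()
        for bot in AI_BOTS:
            if bot.lower() in agent:
                hits[(bot, m["path"], m["status"])] += 1
    return hits
```

A 404 next to one of these bots usually means the file isn’t where the crawler expected it, which is worth fixing first.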

Will this help me appear in ChatGPT answers?

Yes, if your content is of high quality. llms.txt can help GPTBot discover your content. But for ChatGPT to quote or summarize you, the content must also:

Be clear, concise, and well-structured

Match user intent in common queries

Be frequently linked or cited elsewhere

Want a deeper guide? See How to Rank in ChatGPT & AI Tools.

What happens if I forget to update it?

Your AI visibility may stall.

Bots will keep seeing the same old content in your llms.txt and may miss newer blogs or guides. It’s like handing out an outdated resume — people won’t find your best work.

Does this replace robots.txt or XML sitemaps?

No — it complements them.

Think of llms.txt as the third pillar:

robots.txt: Controls crawling behavior

sitemap.xml: Lists all pages for search engines

llms.txt: Curates pages for LLMs and AI tools

Using all three together creates a future-proof content indexing setup.

Get Ahead of the AI Curve

The way we discover and share information is changing fast. Traditional SEO is no longer the only gateway to visibility. AI tools like ChatGPT, Perplexity, Claude, Bing Copilot, and Google SGE are surfacing content based on relevance, structure, and trust, not just keyword rankings.

By creating and maintaining an llms.txt and llms-full.txt file, you’re not just future-proofing your blog — you’re stepping into a new content discovery system designed for AI.

Let’s recap the key takeaways:

✅ llms.txt is like robots.txt, but for AI tools.

✅ llms-full.txt provides rich metadata that helps LLMs understand your content better.

✅ Hosting both files in your root directory allows GPTBot, ClaudeBot, and PerplexityBot to crawl and cite your work.

✅ Updating them regularly ensures your best, freshest work is always on the AI radar.

✅ Structuring your content and using schema boosts your inclusion in generative search experiences.

But here’s the bigger idea:

You’re not just optimizing for search, you’re building context for machines that now help humans decide what to read, trust, and share.

Take Action Today

If you’re serious about content authority in the AI era:

- Publish your llms.txt and llms-full.txt — even if you start small

- Track citations using Perplexity, ChatGPT browsing, and Bing Copilot

- Iterate weekly or monthly as you publish new blogs

- Share your implementation publicly — build credibility as an early adopter

The earlier you act, the more trust you build with AI tools, while others are still figuring it out.

You don’t just chase rankings anymore.

You build context that AI understands, references, and shares automatically.

Mayank Ranjan

Mayank Ranjan is a digital marketing strategist and content creator with a strong passion for writing and simplifying complex ideas. With 7+ years of experience, he blends AI-powered tools with smart content marketing strategies to help brands grow faster and smarter.

Known for turning ideas into actionable frameworks, Mayank writes about AI in marketing, content systems, and personal branding on his blog, ranjanmayank.in, where he empowers professionals and creators to build meaningful digital presence through words that work.